AffinityMatcher#

- class torchdr.AffinityMatcher(affinity_in: Affinity, affinity_out: Affinity | None = None, kwargs_affinity_out: Dict | None = None, n_components: int = 2, loss_fn: str = 'square_loss', kwargs_loss: Dict | None = None, optimizer: str | Type[Optimizer] = 'Adam', optimizer_kwargs: Dict | None = None, lr: float = 1.0, scheduler: str | Type[LRScheduler] | None = None, scheduler_kwargs: Dict | None = None, min_grad_norm: float = 1e-07, max_iter: int = 1000, init: str | Tensor | ndarray = 'pca', init_scaling: float = 0.0001, device: str = 'auto', backend: str | FaissConfig | None = None, verbose: bool = False, random_state: float | None = None, check_interval: int = 50, compile: bool = False, encoder: Module | None = None, **kwargs)[source]#

Bases:

DRModuleDimensionality reduction by matching two affinity matrices.

Solves an optimization problem of the form:

\[\min_{\mathbf{Z}} \: \mathcal{L}( \mathbf{P}, \mathbf{Q})\]where \(\mathcal{L}\) is a loss function, \(\mathbf{P}\) is the input affinity matrix and \(\mathbf{Q}\) is the affinity matrix of the embedding.

The embedding is optimized via first-order methods, with gradients computed either through PyTorch autograd or manually (when

_use_closed_form_gradientsisTrue).When an

encoder(neural network) is provided, its parameters are optimized instead of a raw embedding matrix, enabling out-of-sample extension viatransform().- Parameters:

affinity_in (Affinity) – The affinity object for the input space.

affinity_out (Affinity, optional) – The affinity object for the output embedding space. When None, a custom

_compute_loss()method must be implemented.kwargs_affinity_out (dict, optional) – Additional keyword arguments for the affinity_out method.

n_components (int, optional) – Number of dimensions for the embedding. Default is 2.

loss_fn (str, optional) – Loss function for optimization. Default is “square_loss”.

kwargs_loss (dict, optional) – Additional keyword arguments for the loss function.

optimizer (str or torch.optim.Optimizer, optional) – Optimizer name from

torch.optimor an optimizer class. Default is “Adam”.optimizer_kwargs (dict, optional) – Additional keyword arguments for the optimizer.

lr (float or 'auto', optional) – Learning rate. Default is 1e0.

scheduler (str or torch.optim.lr_scheduler.LRScheduler, optional) – Scheduler name from

torch.optim.lr_scheduleror a scheduler class. Default is None (no scheduler).scheduler_kwargs (dict, optional) – Additional keyword arguments for the scheduler.

min_grad_norm (float, optional) – Gradient norm threshold for convergence. Default is 1e-7.

max_iter (int, optional) – Maximum number of iterations. Default is 1000.

init (str, torch.Tensor, or np.ndarray, optional) – Initialization for the embedding. Default is “pca”.

init_scaling (float, optional) – Scaling factor for the initial embedding. Default is 1e-4.

device (str, optional) – Device for computations. Default is “auto”.

backend ({"keops", "faiss", None} or FaissConfig, optional) – Backend for handling sparsity and memory efficiency. Default is None (standard PyTorch).

verbose (bool, optional) – Verbosity. Default is False.

random_state (float, optional) – Random seed for reproducibility. Default is None.

check_interval (int, optional) – Iterations between convergence checks. Default is 50.

compile (bool, default=False) – Whether to use

torch.compilefor faster computation.encoder (torch.nn.Module, optional) – Neural network mapping input data to the embedding space. Output dimension must match

n_components. Default is None (optimize a raw embedding matrix).

Examples using AffinityMatcher:#

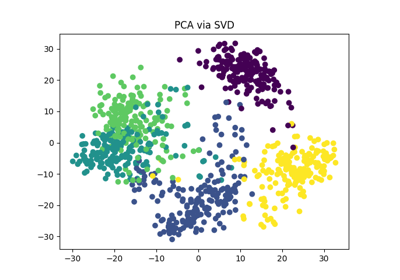

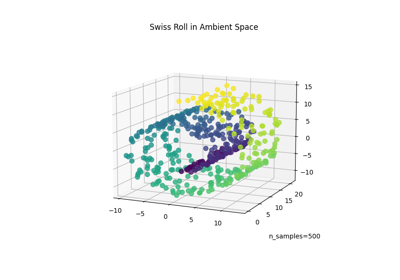

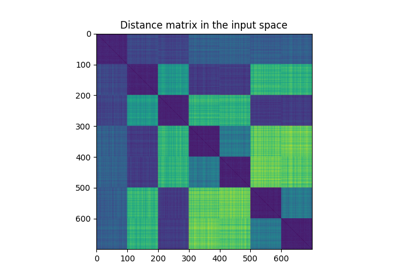

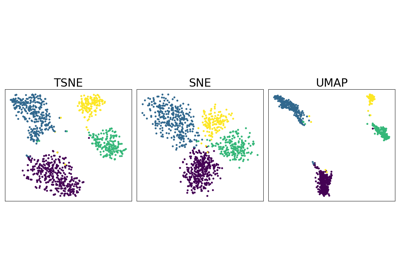

Neighbor Embedding on genomics & equivalent affinity matcher formulation