UMAP

- class torchdr.UMAP(n_neighbors: float = 30, n_components: int = 2, min_dist: float = 0.1, spread: float = 1.0, a: float | None = None, b: float | None = None, lr: float = 1.0, optimizer: str | Type[Optimizer] = 'SGD', optimizer_kwargs: Dict | str | None = None, scheduler: str | Type[LRScheduler] | None = 'LinearLR', scheduler_kwargs: Dict | str | None = 'auto', init: str = 'pca', init_scaling: float = 0.0001, min_grad_norm: float = 1e-07, max_iter: int = 1000, device: str = 'auto', backend: str | FaissConfig | None = 'faiss', verbose: bool = False, random_state: float | None = None, max_iter_affinity: int = 100, metric: str = 'sqeuclidean', negative_sample_rate: int = 5, check_interval: int = 50, discard_NNs: bool = False, compile: bool = False, distributed: bool | str = 'auto', **kwargs)

Bases: NegativeSamplingNeighborEmbedding

UMAP introduced in [McInnes et al., 2018] and further studied in [Damrich and Hamprecht, 2021].

It uses a UMAPAffinity as input affinity \(\mathbf{P}\) and output affinity \(Q_{ij} = (1 + a \| \mathbf{z}_i - \mathbf{z}_j \|^{2b})^{-1}\), where \(a, b\) are fitted from min_dist and spread.

The loss function is defined as:

\[-\sum_{ij} P_{ij} \log Q_{ij} - \sum_{i,j \in \mathrm{Neg}(i)} \log (1 - Q_{ij})\]

where \(\mathrm{Neg}(i)\) is the set of negative samples for point \(i\).
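To make the objective concrete, here is a minimal pure-Python sketch of the output affinity and the negative-sampling loss. It is not TorchDR's implementation: the function names, the dictionary layout for \(\mathbf{P}\) and for the negatives, and the `eps` stabilizer are all assumptions made for illustration. The repulsive term is written as \(-\log(1 - Q_{ij})\), so that minimizing the loss lowers \(Q_{ij}\) on negative pairs and pushes them apart.

```python
import math


def output_affinity(zi, zj, a, b):
    """Q_ij = 1 / (1 + a * ||z_i - z_j||^(2b)) for two embedding points."""
    sq_dist = sum((u - v) ** 2 for u, v in zip(zi, zj))
    # ||z||^(2b) equals (||z||^2)^b, so no square root is needed.
    return 1.0 / (1.0 + a * sq_dist ** b)


def umap_loss(P, Z, negatives, a, b, eps=1e-12):
    """Negative-sampling loss for a small problem.

    P         : dict mapping (i, j) -> P_ij for pairs with nonzero input affinity
    Z         : list of embedding points (each a list of floats)
    negatives : dict mapping i -> list of sampled negative indices Neg(i)
    eps       : hypothetical stabilizer that keeps the logarithms finite
    """
    # Attractive term: pulls together pairs with high input affinity.
    attraction = -sum(
        p_ij * math.log(output_affinity(Z[i], Z[j], a, b) + eps)
        for (i, j), p_ij in P.items()
    )
    # Repulsive term: penalizes large Q_ij on sampled negative pairs.
    repulsion = -sum(
        math.log(1.0 - output_affinity(Z[i], Z[j], a, b) + eps)
        for i, js in negatives.items()
        for j in js
    )
    return attraction + repulsion


# Toy data: point 1 is a neighbor of point 0, point 2 is a sampled negative.
Z = [[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]]
P = {(0, 1): 1.0}
negatives = {0: [2]}
# a and b are illustrative values only; the class fits them from min_dist and spread.
loss = umap_loss(P, Z, negatives, a=1.577, b=0.895)
```

Moving the negative point farther from point 0 decreases the repulsive term, while moving the neighbor closer decreases the attractive term.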

Note

This implementation supports multi-GPU training when launched with torchrun. Set distributed='auto' (default) to automatically detect and use multiple GPUs.

- Parameters:

n_neighbors (float, optional) – Number of nearest neighbors.

n_components (int, optional) – Dimension of the embedding space.

min_dist (float, optional) – Minimum distance between points in the embedding space.

spread (float, optional) – The effective scale of the embedded points. Used to configure the UMAPAffinityOut.

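a (float, optional) – Parameter \(a\) of the output affinity (a Student t-like kernel). If None, it is fitted from min_dist and spread.

b (float, optional) – Parameter \(b\) of the output affinity (a Student t-like kernel). If None, it is fitted from min_dist and spread.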

lr (float, optional) – Learning rate for the algorithm, by default 1.0.

optimizer (str or torch.optim.Optimizer, optional) – Name of an optimizer from torch.optim or an optimizer class. Default is “SGD”.

optimizer_kwargs (dict, 'auto', or None, optional) – Additional keyword arguments for the optimizer. If ‘auto’, sets appropriate momentum values for SGD based on the early exaggeration phase. Default is None.

scheduler (str or torch.optim.lr_scheduler.LRScheduler, optional) – Name of a scheduler from torch.optim.lr_scheduler or a scheduler class. Default is “LinearLR”.

scheduler_kwargs (dict, 'auto', or None, optional) – Additional keyword arguments for the scheduler. Default is ‘auto’, which corresponds to a linear decay from the learning rate to 0 for LinearLR.

init ({'normal', 'pca'} or torch.Tensor of shape (n_samples, output_dim), optional) – Initialization for the embedding Z, default ‘pca’.

init_scaling (float, optional) – Scaling factor for the initialization, by default 1e-4.

min_grad_norm (float, optional) – Precision threshold at which the algorithm stops, by default 1e-7.

max_iter (int, optional) – Maximum number of iterations for the descent algorithm, by default 1000.

device (str, optional) – Device to use, by default “auto”.

backend ({"keops", "faiss", None} or FaissConfig, optional) – Which backend to use for handling sparsity and memory efficiency. Can be:

- “keops”: Use KeOps for memory-efficient symbolic computations
- “faiss”: Use FAISS for fast k-NN computations with default settings
- None: Use standard PyTorch operations
- FaissConfig object: Use FAISS with custom configuration

Default is “faiss”.

verbose (bool, optional) – Verbosity, by default False.

random_state (float, optional) – Random seed for reproducibility, by default None.

max_iter_affinity (int, optional) – Maximum number of iterations for the input affinity computation, by default 100.

metric ({'sqeuclidean', 'euclidean', 'manhattan'}, optional) – Metric to use for the input affinity, by default ‘sqeuclidean’.

negative_sample_rate (int, optional) – Number of negative samples for the noise-contrastive loss, by default 5.

check_interval (int, optional) – Check interval for the algorithm, by default 50.

discard_NNs (bool, optional) – Whether to discard the nearest neighbors from the negative sampling. Default is False.

compile (bool, optional) – Whether to compile the algorithm using torch.compile. Default is False.

distributed (bool or 'auto', optional) – Whether to use distributed computation across multiple GPUs.

- “auto”: Automatically detect if running with torchrun (default)
- True: Force distributed mode (requires torchrun)
- False: Disable distributed mode

Default is “auto”.
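As a rough illustration of how these parameters fit together, the sketch below collects a few of the documented defaults in a dictionary and passes them to the constructor. The helper name `embed`, the `UMAP_KWARGS` dictionary, and the seed value are hypothetical, and the call to `fit_transform` assumes the class follows the usual scikit-learn-style estimator interface. The import is deferred inside the function so the snippet loads even where torchdr is not installed.

```python
# Keyword arguments copied from the documented signature defaults,
# except random_state, which is set here only to make runs repeatable.
UMAP_KWARGS = {
    "n_neighbors": 30,
    "n_components": 2,
    "min_dist": 0.1,
    "spread": 1.0,
    "negative_sample_rate": 5,
    "max_iter": 1000,
    "backend": "faiss",
    "device": "auto",
    "distributed": "auto",
    "random_state": 0,
}


def embed(X, **overrides):
    """Fit UMAP on X of shape (n_samples, n_features) and return the embedding.

    Assumes torchdr is installed and that UMAP exposes a scikit-learn-style
    fit_transform method. Any keyword in `overrides` replaces the default above.
    """
    from torchdr import UMAP  # deferred import: only needed when embed() is called

    model = UMAP(**{**UMAP_KWARGS, **overrides})
    return model.fit_transform(X)
```

For example, `embed(X, backend=None, n_neighbors=15)` would run with standard PyTorch operations and a smaller neighborhood. For multi-GPU runs, a script calling this helper would be launched through torchrun (for instance `torchrun --nproc_per_node=2 script.py`, where `script.py` is a placeholder name).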

Examples using UMAP:

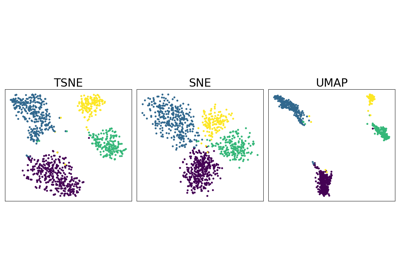

Neighbor Embedding on genomics & equivalent affinity matcher formulation