EntropicAffinity

- class torchdr.EntropicAffinity(perplexity: float = 30, max_iter: int = 1000, sparsity: bool = True, metric: str = 'sqeuclidean', zero_diag: bool = True, device: str = 'auto', backend: str | FaissConfig | None = None, verbose: bool = False, compile: bool = False, distributed: bool | str = 'auto', _pre_processed: bool = False)

Bases: SparseLogAffinity

Solve the directed entropic affinity problem introduced in [Hinton and Roweis, 2002].

The algorithm computes the optimal dual variable \(\mathbf{\varepsilon}^\star \in \mathbb{R}^n_{>0}\) such that

\[\forall (i,j), \: P^{\mathrm{e}}_{ij} = \frac{\exp(- C_{ij} / \varepsilon_i^\star)}{\sum_{\ell} \exp(- C_{i\ell} / \varepsilon_i^\star)} \quad \text{where} \quad \forall i, \: \mathrm{h}(\mathbf{P}^{\mathrm{e}}_{i:}) = \log (\xi) + 1 \:.\]

where:

\(\mathbf{C}\): symmetric pairwise distance matrix between the samples.

\(\xi\): perplexity parameter.

\(\mathrm{h}\): (row-wise) Shannon entropy such that \(\mathrm{h}(\mathbf{p}) = - \sum_{i} p_{i} (\log p_{i} - 1)\).

\(\mathbf{\varepsilon}^\star\) is computed by one-dimensional root searches, one per row, since the rows of \(\mathbf{P}^{\mathrm{e}}\) are independent subproblems.
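The row-wise search can be sketched in plain Python for a single row of \(\mathbf{C}\). This is a minimal illustration that assumes a log-scale bisection on \(\varepsilon_i\); it is not TorchDR's actual root-finding routine, and the names `row_affinity`, `entropy` and `solve_eps`, as well as the bracket `[1e-6, 1e6]`, are hypothetical choices made for the example.

```python
import math


def row_affinity(costs, eps):
    """Row of P^e: softmax of -costs / eps, shifted for numerical stability."""
    m = min(costs)
    weights = [math.exp(-(c - m) / eps) for c in costs]
    total = sum(weights)
    return [w / total for w in weights]


def entropy(p):
    """h(p) = -sum_i p_i (log p_i - 1), the convention used in this page."""
    return -sum(x * (math.log(x) - 1.0) for x in p if x > 0.0)


def solve_eps(costs, perplexity, lo=1e-6, hi=1e6, max_iter=200, tol=1e-10):
    """Bisection on eps (in log scale) so that h(row) = log(perplexity) + 1.

    Assumes the target is reachable inside [lo, hi]. The entropy of the row
    increases with eps, so the sign of the gap tells which half to keep.
    """
    target = math.log(perplexity) + 1.0
    mid = math.sqrt(lo * hi)
    for _ in range(max_iter):
        mid = math.sqrt(lo * hi)  # geometric midpoint of the bracket
        gap = entropy(row_affinity(costs, mid)) - target
        if abs(gap) < tol:
            break
        if gap > 0.0:
            hi = mid  # row too spread out: decrease eps
        else:
            lo = mid  # row too concentrated: increase eps
    return mid


# Toy row of costs (hypothetical values) and a target perplexity of 3.
costs = [0.5, 1.0, 2.0, 4.0, 8.0, 3.0]
eps = solve_eps(costs, perplexity=3.0)
p = row_affinity(costs, eps)
```

Because each row only depends on its own \(\varepsilon_i\), the same search can be run on all rows independently, which is what makes the problem easy to parallelize.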

This is a convex problem: \(\mathbf{P}^{\mathrm{e}}\) corresponds to the matrix of the same name in [Van Assel et al., 2024] and solves the convex optimization problem

\[\begin{split}\mathbf{P}^{\mathrm{e}} \in \mathop{\arg\min}_{\mathbf{P} \in \mathbb{R}_+^{n \times n}} \: &\langle \mathbf{C}, \mathbf{P} \rangle \\ \text{s.t.} \quad &\mathbf{P} \mathbf{1} = \mathbf{1} \\ &\forall i, \: \mathrm{h}(\mathbf{P}_{i:}) \geq \log (\xi) + 1 \:.\end{split}\]

where \(\mathbf{1} := (1,\dots,1)^\top\) is the all-ones vector.

The entropic affinity matrix is akin to a soft \(k\) -NN affinity, with the perplexity parameter \(\xi\) acting as \(k\). Each point distributes a unit mass among its closest neighbors while minimizing a transport cost given by \(\mathbf{C}\).

The entropic constraint is saturated at the optimum and governs mass spread. With small \(\xi\), mass concentrates on a few neighbors; with large \(\xi\), it spreads across more neighbors thus capturing larger scales of dependencies.
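The soft \(k\)-NN reading can be checked on a toy row: a distribution that puts equal mass on exactly \(k\) neighbors has \(\mathrm{h}(\mathbf{p}) = \log(k) + 1\), so it meets the entropy constraint with \(\xi = k\). The snippet below is a small sanity check in plain Python; the helper name `entropy` and the values of `n` and `k` are hypothetical and chosen only for illustration.

```python
import math


def entropy(p):
    """h(p) = -sum_i p_i (log p_i - 1), skipping zero entries."""
    return -sum(x * (math.log(x) - 1.0) for x in p if x > 0.0)


n, k = 20, 5
# Hard k-NN row: unit mass shared equally among k of the n points.
p_knn = [1.0 / k] * k + [0.0] * (n - k)

# Perplexity implied by the row, obtained by inverting h = log(xi) + 1.
implied_perplexity = math.exp(entropy(p_knn) - 1.0)
```

Here `implied_perplexity` matches `k` up to floating-point error, which is the sense in which \(\xi\) plays the role of a number of effective neighbors.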

Note

A symmetric version is also available as SymmetricEntropicAffinity, which is the affinity matrix used in SNEkhorn/TSNEkhorn [Van Assel et al., 2024]. In TSNE [Van der Maaten and Hinton, 2008], the entropic affinity is simply averaged with its transpose.

- Parameters:

perplexity (float, optional) – Perplexity parameter, related to the number of ‘effective’ nearest neighbors. Consider selecting a value between 2 and the number of samples.

max_iter (int, optional) – Maximum number of iterations for the root-finding algorithm.

sparsity (bool, optional) – If True, keeps only the 3 * perplexity smallest elements on each row of the ground cost matrix. Recommended if perplexity is not too big. Default is True.

metric (str, optional) – Metric to use for computing distances (default “sqeuclidean”).

zero_diag (bool, optional) – Whether to set the diagonal of the distance matrix to 0.

device (str, optional) – Device to use for computation.

backend ({"keops", "faiss", None} or FaissConfig, optional) – Which backend to use for handling sparsity and memory efficiency. Can be:

- “keops”: Use KeOps for memory-efficient symbolic computations
- “faiss”: Use FAISS for fast k-NN computations with default settings
- None: Use standard PyTorch operations
- FaissConfig object: Use FAISS with custom configuration

Default is None.

verbose (bool, optional) – Verbosity. Default is False.

compile (bool, optional) – If True, use torch.compile. Default is False.

distributed (bool or 'auto', optional) – Whether to use distributed computation across multiple GPUs.

- “auto”: Automatically detect if running with torchrun (default)
- True: Force distributed mode (requires torchrun)
- False: Disable distributed mode

Default is “auto”.

_pre_processed (bool, optional) – If True, assumes inputs are already torch tensors on the correct device and skips the to_torch conversion. Default is False.

Examples using EntropicAffinity:

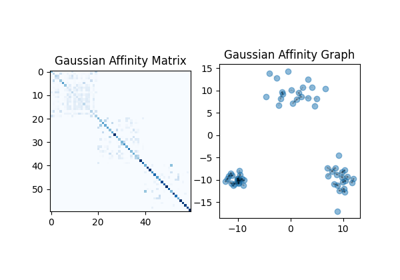

Entropic Affinities can adapt to varying noise levels

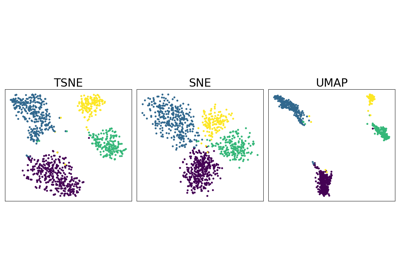

Neighbor Embedding on genomics & equivalent affinity matcher formulation