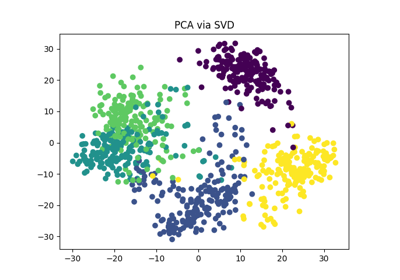

PCA#

- class torchdr.PCA(n_components: int = 2, device: str = 'auto', distributed: str | bool = 'auto', verbose: bool = False, random_state: float | None = None, svd_driver: str | None = None, **kwargs)[source]#

Bases:

DRModulePrincipal Component Analysis module.

- Parameters:

n_components (int, default=2) – Number of components to project the input data onto.

device (str, default="auto") – Device on which the computations are performed.

distributed (str or bool, default="auto") –

Whether to use distributed mode for multi-GPU training.

”auto”: Automatically detect if torch.distributed is initialized and use distributed mode if available.

True: Force distributed mode (requires torch.distributed to be initialized).

False: Disable distributed mode.

In distributed mode, each GPU computes local statistics which are then aggregated using all-reduce operations. This is communication-efficient when the number of samples is much larger than the number of features (n >> d).

verbose (bool, default=False) – Whether to print information during the computations.

random_state (float, default=None) – Random seed for reproducibility.

svd_driver (str, optional) – Name of the cuSOLVER method to be used for torch.linalg.svd. This keyword argument only works on CUDA inputs. Available options are: None, gesvd, gesvdj and gesvda. Defaults to None.

- mean_#

Per-feature empirical mean, calculated from the training set.

- Type:

torch.Tensor of shape (1, n_features)

- components_#

Principal axes in feature space, representing the directions of maximum variance in the data.

- Type:

torch.Tensor of shape (n_components, n_features)

- embedding_#

The transformed data after calling fit_transform.

- Type:

Examples

Standard single-GPU usage:

from torchdr import PCA import torch X = torch.randn(1000, 50) pca = PCA(n_components=10) X_reduced = pca.fit_transform(X)

Multi-GPU distributed usage (launch with torchrun –nproc_per_node=4):

import torch from torchdr import PCA # Each GPU loads its chunk of the data rank = torch.distributed.get_rank() world_size = torch.distributed.get_world_size() chunk_size = len(full_data) // world_size X_local = full_data[rank * chunk_size:(rank + 1) * chunk_size] # Distributed PCA - handles communication automatically pca = PCA(n_components=50, distributed="auto") X_transformed = pca.fit_transform(X_local)

Notes

In distributed mode:

Requires torch.distributed to be initialized (use torchrun or TorchDR CLI)

Automatically uses local_rank for GPU assignment

Each GPU only needs its data chunk in memory

Uses the covariance method: computes X.T @ X locally and aggregates via all-reduce, which has O(d^2) communication cost

- transform(X: Tensor | ndarray) Tensor | ndarray[source]#

Project the input data onto the PCA components.

- Parameters:

X (torch.Tensor or np.ndarray of shape (n_samples, n_features)) – Data to project onto the PCA components.

- Returns:

X_new – Projected data.

- Return type:

torch.Tensor or np.ndarray of shape (n_samples, n_components)